How does FaceApp: AI photo editor work?

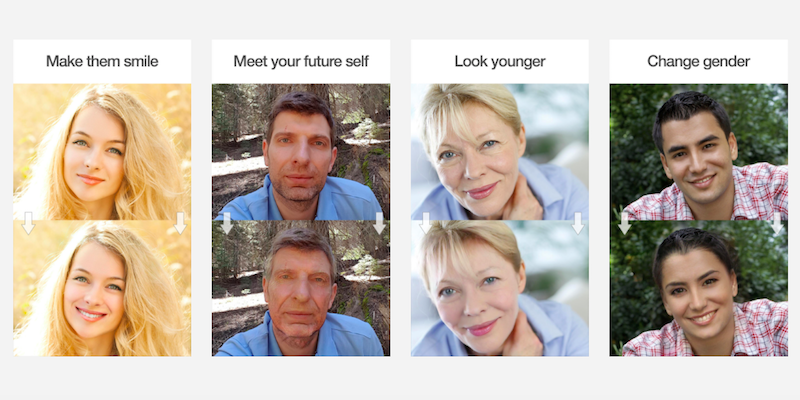

FaceApp is a free app that can be downloaded to your device from the App Store or Google Play. Currently, there are 21 free filters in the basic version.

Recently, the app has gone viral again, with a lot of celebrities posting pictures of their older selves created with the app. In fact, this has started an entirely new trend, the FaceApp challenge, on social media platforms like Twitter and Instagram.

Amidst all this virality, there is one question I am sure a lot of you might be asking right now.

How does FaceApp work?

To be honest, this question would occur to anyone, even more so because the app does such a tremendous job of creating lifelike pictures. We tried contacting the developers to learn more, but they did not give out any details. (Duhh).

So, we tried to figure it out ourselves!

Based on our analysis, FaceApp is most likely using the following technologies (or variants of them):

- OpenCV

- CNN (Convolutional Neural Networks)

- GAN (Generative Adversarial Networks)

I'll explain how each of these fits into solving the puzzle of how this app works.

1. OpenCV

OpenCV ships out of the box with pre-trained Haar cascades that can be used for face detection. There is also a “hidden” deep learning-based face detector (a ResNet-based SSD model run through OpenCV's `dnn` module) that has been part of OpenCV since version 3.3.

This makes OpenCV a very good candidate for finding and extracting faces from an image. What's more, it does not take much to run this in real time on a live camera feed.

2. Convolutional Neural Networks (CNN)

This point could very well have been a subheading under OpenCV, since OpenCV's deep learning face detector is itself a CNN (the Haar cascades are not; they are a classical boosted-classifier technique). However, I chose to keep it separate to highlight how CNNs are likely being used in conjunction with GANs in this particular app.

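A CNN slides small learned filters over an image, so its early layers respond to simple patterns such as edges while deeper layers respond to larger structures such as eyes, mouths, and hairlines. This makes CNNs the ideal choice for extracting features from images and identifying the key areas where the filters should be applied.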
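
As an illustration of what "extracting features" means, the sketch below runs a single convolutional filter over a toy image with NumPy. The kernel is a hand-picked Sobel edge detector, chosen for demonstration; a trained CNN learns many such kernels from data, and nothing here reflects FaceApp's actual architecture.

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Valid-mode 2-D cross-correlation, which is what CNN 'convolution' layers compute."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # Multiply the kernel with the patch under it and sum the result.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    """Keep positive responses, zero out the rest (standard CNN activation)."""
    return np.maximum(x, 0.0)


# Vertical-edge detector (Sobel-x). In a trained CNN these weights are learned.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)

# Toy 6x6 grayscale "image": dark left half, bright right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

feature_map = relu(conv2d(image, SOBEL_X))
```

Each row of `feature_map` comes out as `[0, 4, 4, 0]`: the filter responds only where dark meets bright. A CNN stacks many such localized responses, layer after layer, to locate facial features.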

3. Generative Adversarial Networks (GAN)

This is most likely where the app's biggest strength lies. A GAN is what would give it the ability to produce images that look so close to the real person.

Over the last couple of years, GANs have created quite a stir in the deep learning community, thanks to a series of impressive demonstrations of their capabilities. The idea is to pit two neural networks against each other ("adversarial"), where each tries to outdo the other.

GANs, or Generative Adversarial Networks, are deep neural networks that act as generative models of data. What this means is that, given a set of training data, GANs can learn to estimate the underlying probability distribution of the data. This is very useful because, among other things, we can then generate samples from the learned distribution that may not be present in the original training set.

The two competing Neural Networks are called Generator and Discriminator.

- Generator - The generator is a neural network that creates new instances of data (here, images).

- Discriminator - The discriminator is a neural network that judges whether an image it is shown comes from the real training dataset or was produced by the generator.
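
A bare-bones NumPy sketch of the two roles, assuming single dense layers and tiny illustrative sizes (`NOISE_DIM`, `IMAGE_DIM` and the other names are hypothetical): the generator maps random noise to a fake "image", and the discriminator maps an image to the probability that it is real. Real face GANs use deep convolutional networks, and the weights below are untrained.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

NOISE_DIM = 8    # size of the random input vector z (illustrative)
IMAGE_DIM = 16   # a flattened 4x4 "image" (illustrative; real faces are far larger)

# Generator parameters: one dense layer mapping noise -> image.
G_W = rng.normal(0.0, 0.1, size=(IMAGE_DIM, NOISE_DIM))
G_b = np.zeros(IMAGE_DIM)

# Discriminator parameters: one dense layer mapping image -> single logit.
D_w = rng.normal(0.0, 0.1, size=IMAGE_DIM)
D_b = 0.0


def generator(z: np.ndarray) -> np.ndarray:
    """Turn random noise z into a fake image with pixel values in (-1, 1)."""
    return np.tanh(G_W @ z + G_b)


def discriminator(x: np.ndarray) -> float:
    """Return the estimated probability that x came from the real dataset."""
    logit = D_w @ x + D_b
    return float(1.0 / (1.0 + np.exp(-logit)))


z = rng.normal(size=NOISE_DIM)      # sample noise
fake_image = generator(z)           # generator creates a new instance
p_real = discriminator(fake_image)  # discriminator scores it
```

Training would then adjust `G_W`/`G_b` to push `p_real` up for generated images, and `D_w`/`D_b` to push it down for fakes and up for real ones.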

What’s going on between the generator and the discriminator here is a two-player zero-sum game. In other words, at every step the generator tries to maximize the chance of the discriminator misclassifying its image, while the discriminator tries to maximize its chance of correctly classifying each incoming image.
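
For reference, this game is usually written as the minimax objective from the original GAN paper (Goodfellow et al., 2014), where D(x) is the discriminator's probability that x is real, G(z) is the image generated from noise z, and p_z is the noise distribution. Whether FaceApp trains with exactly this objective is not public.

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator pushes V up and the generator pushes it down. For a fixed generator, the best discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)), so once the generator's distribution p_g matches p_data, D*(x) = 1/2 everywhere and V = -log 4.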

Ultimately, if everything goes well, the Generator (more or less) learns the true distribution of the training data and becomes really good at generating real-looking images (faces, in FaceApp's case). The Discriminator can no longer distinguish between training set images and generated images.
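
That end state can be checked with a little arithmetic. This Python sketch computes the usual binary cross-entropy losses from the discriminator's output probabilities. The generator loss uses the common "non-saturating" variant, -log D(G(z)), a practical substitute for the pure minimax form; the function names are hypothetical.

```python
import math


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Average of -log D(x) on real images plus -log(1 - D(G(z))) on generated ones."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: average of -log D(G(z))."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Early in training: the discriminator easily spots fakes.
early_d = discriminator_loss([0.9, 0.9], [0.1, 0.1])
early_g = generator_loss([0.1, 0.1])

# At equilibrium: the discriminator can only guess 0.5 for everything.
final_d = discriminator_loss([0.5, 0.5], [0.5, 0.5])
final_g = generator_loss([0.5, 0.5])
```

With a confident discriminator (0.9 on real images, 0.1 on fakes) its loss is small, about 0.21, while the generator's is large, about 2.30. Once the discriminator is reduced to guessing 0.5, its loss rises to log 4 (about 1.386): it can no longer tell real from generated.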

Note that the three things listed above are not all the creators would have needed to develop the app. Obviously, there would be other things: knowledge of Android and iOS app development, servers to do the image processing and filtering in real time, and the creativity to design filters suited to different face types. There is a very good chance that an entire model was put to work just to produce all the different filters: wrinkles, beards, changed hair, and eyebrow colors.

Also, even once all of the individual components are in place, piecing them together is a challenge in itself. All of this makes me applaud the developers for the work they have done.

Hope you learned something from this post.

Cheers!
